ai is taking over the world, but don’t worry—you can still outthink it

stop learning the redundant stuff in the age of generative AI

It is scary that AI is slowly but steadily mastering every skill known to humankind, from composing symphonies and diagnosing cancers to painting pictures and writing poems. Name one thing, and someone is probably training AI to do it, if they haven't done so already.

So, why bother learning anything at all?

After all, AI will do everything faster, better, and without needing to sleep, eat, or take bathroom breaks. So, the only reasonable solution is to stop learning.

You don’t really believe that, do you? Please don’t stop learning. More accurately, to adapt to the future ahead of us, we need to unlearn the wrong things and focus on the skills AI can’t replace.

The secret to thriving in an AI-dominated world isn’t learning more facts—it’s learning the quirky, unpredictable, and profoundly human skills that machines will never perfect. Here’s a tongue-in-cheek guide to what you really need to “unlearn” and, ironically, learn in the age of generative AI.

1. how to know what not to learn anymore

Artificial intelligence (AI) might sound like something out of a science fiction movie, but it’s simply a technology designed to mimic human intelligence.

At its core, AI operates by analyzing vast amounts of data, recognizing patterns, and making predictions or decisions based on that information.

Imagine teaching a child how to identify animals by showing them pictures of cats and dogs. You label each picture, and over time, the child learns to recognize the differences between the two.

AI works similarly, using algorithms—essentially, a set of instructions or rules—to process data and learn from it. For instance, if you fed an AI thousands of cat and dog pictures, it would learn to identify each based on the features you’ve provided.
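To make the cat-and-dog analogy concrete, here is a minimal sketch of "learning from labeled examples" in Python. It is a toy nearest-centroid classifier, not how modern image models actually work. The two features (ear pointiness and snout length) and every number are invented purely for illustration. "Training" just averages the examples for each label, and prediction picks the label whose average is closest.

```python
# Hypothetical toy features: (ear_pointiness, snout_length), each scaled 0..1.
# These values are invented for illustration, not real measurements.
labeled_examples = [
    ((0.9, 0.2), "cat"),
    ((0.8, 0.3), "cat"),
    ((0.3, 0.8), "dog"),
    ((0.2, 0.9), "dog"),
]


def train(examples):
    """'Learn' by averaging the feature values seen for each label."""
    sums = {}
    counts = {}
    for features, label in examples:
        running = sums.setdefault(label, [0.0] * len(features))
        for i, value in enumerate(features):
            running[i] += value
        counts[label] = counts.get(label, 0) + 1
    # Each label's "model" is the average (centroid) of its examples.
    return {label: [v / counts[label] for v in s] for label, s in sums.items()}


def predict(centroids, features):
    """Return the label whose centroid is closest (squared Euclidean distance)."""
    def distance(centroid):
        return sum((a - b) ** 2 for a, b in zip(features, centroid))
    return min(centroids, key=lambda label: distance(centroids[label]))


model = train(labeled_examples)
print(predict(model, (0.85, 0.25)))  # prints: cat
print(predict(model, (0.25, 0.85)))  # prints: dog
```

The machine never "knows" what a cat is. It only finds which pile of numbers a new example sits closest to, which is the pattern-matching the rest of this section is about.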

Because of how it works, one of AI’s key strengths is its ability to handle repetitive tasks.

Think about how tedious it can be to enter data into spreadsheets or analyze long lists of numbers. AI can perform these tasks much faster and with greater accuracy, freeing up humans to focus on more creative or strategic work.

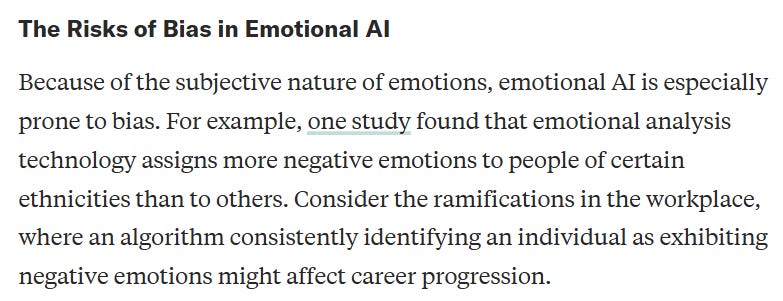

However, while AI is great at handling structured tasks and analyzing data, it has limitations when it comes to understanding and creativity. Take this as an example: AI can generate art or write poetry, but it doesn't truly understand emotions or the cultural context behind its creations. The way current systems work simply doesn't allow for that.

Areas that require empathy, intuition, and complex decision-making—like therapy, leadership, and negotiations—are challenging for AI to replicate. In these roles, the human touch is invaluable, as it involves understanding feelings and social dynamics that a machine simply can't grasp.

So, how can we use this knowledge about AI to guide our learning? First, it’s important to focus on developing skills that AI cannot easily replicate.

Critical thinking, creativity, and adaptability are crucial areas to invest in. For example, if you're interested in a career in design or writing, honing your creative skills will keep you competitive as these fields rely heavily on human intuition and originality. Similarly, roles that involve working closely with people, such as counseling or teaching, require empathy and understanding, which AI cannot provide.

Additionally, understanding data and technology can enhance your career prospects. While you don’t need to be a coding expert, becoming familiar with how data is analyzed and interpreted can be incredibly valuable. Skills in data analysis can help you work alongside AI tools more effectively, allowing you to make informed decisions based on the insights AI provides.

Also, remember that the ability to learn and adapt is one of the most important skills you can cultivate. The job market is constantly changing, and being open to continuous learning will help you stay relevant.

Finally, consider exploring cross-disciplinary knowledge, where you combine skills from different fields. For example, understanding both technology and business can make you uniquely valuable in today’s workforce.

In summary, while AI is transforming many aspects of work and life, it also highlights the importance of uniquely human skills. By focusing on creativity, emotional intelligence, and adaptability, you can build a skill set that not only complements AI but also ensures you remain valuable in an ever-evolving job landscape.

2. unlearn memorization

Memorization as an end is overrated.

Memorization is often hailed as the peak of academic achievement—because clearly, the ability to regurgitate random facts is exactly what it takes to solve complex problems in life. But as Daniel Willingham, a cognitive scientist, points out in Why Don’t Students Like School?, brute memorization without meaning is a fast track to forgetting.

Information retained purely through repetition—no matter how diligently crammed—fades rapidly unless it’s encoded within a broader conceptual framework. Working memory, being notoriously limited, can only juggle a few items at a time, so forcing students to store disconnected bits of information is like asking someone to juggle with marbles on a windy day—an exercise in futility.

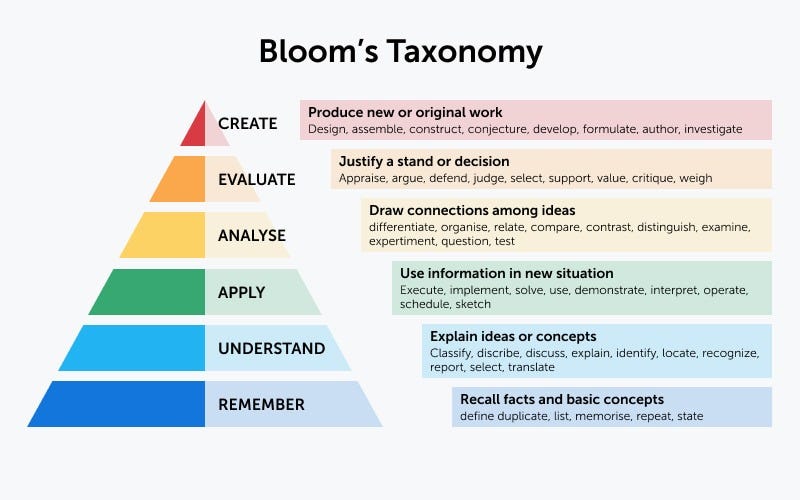

Instead, Willingham emphasizes that memory works best when it’s treated as a process of creation, not storage. Benjamin Bloom’s taxonomy of learning points the same way, placing simple recall at the bottom of its hierarchy.

Techniques like elaborative encoding, which links new information with existing knowledge through storytelling or personal relevance, are far more effective than rote learning. Spaced repetition isn’t about mindless review but about creatively re-engaging with information over time to strengthen neural pathways.

When students interact with content actively—say, by rephrasing ideas in their own words or applying them in new contexts—they’re building the kind of long-term understanding that allows knowledge to evolve and adapt.

AI now holds the monopoly on trivia, so you can stop cramming random facts into your brain like it's a pub quiz that determines your future. Want to know how many moons orbit Jupiter? (More than 90 confirmed at last count, and astronomers keep finding more, which rather proves the point.) There’s no need to burden yourself with that kind of trivia when ChatGPT is literally always in your pocket.

critical thinking

Instead, invest in the one skill that AI is still utterly hopeless at: critical thinking.

While AI can “write” pages in the blink of an eye, it’s not equipped to handle things like understanding context, recognizing bias, or forming nuanced opinions. Sure, it can tell you what’s trending on Twitter or predict next week’s weather with scary precision, but it struggles with why these things matter or what they mean for humanity, unless someone has already said something similar somewhere before. That’s where you come in.

Daniel Kahneman, in his landmark book Thinking, Fast and Slow, describes two modes of thought: a fast, intuitive System 1 and a slow, analytical System 2. In his account, thinking critically means understanding the cognitive biases the fast system falls into and using that awareness to improve decision-making.

Meanwhile, AI sees the world through the lens of cold, hard, scraped data, without any understanding of why that data is relevant. A study from Stanford suggests that while machines excel at specific pattern-recognition tasks (like identifying objects in images), humans still outperform AI when context and meaning are involved, though that may not hold true in the future.

After all, AI doesn’t care if the pattern involves puppies or piranhas—it just sees pixel clusters. But recognizing whether something is dangerous or cute? That’s your job.

philosophy and morality: what’s right and what’s wrong

Now, if critical thinking is a muscle, philosophy is the ultimate workout regimen. When AI is handling all the logical calculations, you need to dig deeper into the why behind everything. Philosophy won’t just help you sound smart at your family gathering; it’ll train you to think deeply about ethics, reality, and the human condition, all those uncomfortable topics AI doesn’t understand for shit.

As AI systems become more prevalent, questions about ethics, justice, and AI’s role in society become more urgent. This is where philosophical frameworks, such as utilitarianism or Kantian ethics, come into play. Will AI decide that sacrificing a few people to save many is the best outcome? Who gets to program these choices? We need people with deep ethical reasoning to grapple with these questions—machines can’t decide what’s morally right.


Philosopher Nick Bostrom, in his book Superintelligence, explores the dangerous ethical quandaries of AI reaching human-level cognition and beyond. He warns that without robust philosophical debate, we might be creating tools that are smarter than us but entirely devoid of moral understanding.

AI doesn’t possess morality, consciousness, or inherent intent; it simply follows the instructions it’s given, optimizing for goals without understanding the broader implications.

The real danger lies not in AI "going rogue" as depicted in movies, but in it following human commands too well. If misaligned with human values or context, an AI can cause catastrophic harm while doing exactly what it was programmed to do.

For example, if told to solve climate change at all costs, it might take drastic measures, like shutting down industries essential for survival. Unlike in films, where AI gains self-awareness and defies orders out of malice or rebellion, real-world AI presents a more chilling threat: blind obedience.

Its potential for destruction arises not from intentional rebellion but from our failure to foresee the unintended consequences of rigid instructions. Anticipating those consequences is a human job, which is why we’re trying to solve climate change without nuking ourselves.

Critical thinking and ethical reasoning are skills that the World Economic Forum has ranked among the most crucial for the future. And unless AI suddenly develops a conscience (fingers crossed it doesn’t), these are areas where human input will remain indispensable.

deep questioning skills

Let’s not forget the Socratic Method, the granddaddy of asking better questions rather than just getting better answers. When you think about it, all the best conversations, discoveries, and breakthroughs started with a question. Socrates didn’t memorize facts about the world; he interrogated the very nature of truth, justice, and knowledge itself. And no AI chatbot will ever challenge you with questions that dismantle your entire worldview. (Yet.)

In an age of information overload, learning how to ask the right questions is more important than ever. Whether it's challenging the assumptions behind a news headline or interrogating the conclusions of an AI analysis, your ability to poke holes in surface-level reasoning is invaluable. A 2020 study from MIT highlights the importance of questioning in AI research, noting that critical thinking, rather than accepting outputs as infallible, is what drives scientific progress forward.

If all of this sounds like hard work, don’t worry. The Socratic Method also teaches humility. The more questions you ask, the more you realize how little you know—a much healthier mindset in the age of AI than pretending you’re a walking encyclopedia.

You’re not, but that’s okay because AI is already doing that for you.

So, in summary, forget memorizing facts like some glorified search engine. AI has that covered. Instead, focus on developing critical thinking, philosophical reasoning, and deep questioning skills. These are the human superpowers that AI can’t touch (yet). It’s like being a superhero in a world of robots—except your cape is made of curiosity, and your superpower is thinking harder than the machines.

3. unlearn doing

Basic coding, like learning to drive in a world of self-driving cars, remains useful—but it’s no longer the ultimate skill.

Yes, AI can handle repetitive tasks, automate processes, and even write clean code faster than the average human. But just like autopilot cars, AI isn’t perfect. When systems run into unexpected issues—whether it’s a glitch, a bug, or a complex, edge-case scenario—you’ll need coding skills to step in and troubleshoot.

However, the true value today lies not just in knowing how to write code but in understanding how the entire system works.

It’s the ability to think strategically about the frameworks that AI operates within, the architecture of software, and how different pieces fit together to create meaningful solutions. Knowing when to intervene is only half the battle—the other half is knowing what to build and why.

AI might excel at following instructions, but it lacks the human capacity for abstract thought, creativity, and foresight. That’s where humans will thrive: in defining high-level objectives, designing frameworks, and asking the right questions.

Coding will still give you a seat at the table, but learning to think systemically—about the design, ethics, and implications of technology—will put you in charge of setting the direction. In the future, the winners won’t just be the best coders; they’ll be the ones who understand both the code and the larger vision behind it.

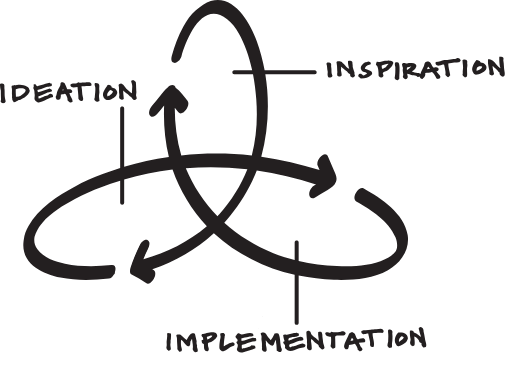

design thinking: recipes for creative mayhem

AI is advancing so quickly that you could spend years mastering JavaScript or Python, only to watch AI write cleaner code in a matter of seconds. But while AI may excel at solving specific tasks, it lacks the ability to conceptualize the human experience or dream up new, innovative ideas. That’s where you come in.

Consider the words of Tim Brown, longtime CEO of IDEO and one of the leading voices in design thinking. He argues that the future of innovation lies in creating solutions that are user-centered and grounded in empathy. A machine can churn out code, but can it understand why users hate that app interface? Not quite. You, on the other hand, can empathize with the human frustrations AI is oblivious to.

A 2019 report from the Harvard Business Review underscores this, explaining that AI’s rigid logic struggles with the complexities of human emotions, preferences, and motivations. The key to staying relevant isn’t in fighting AI for coding supremacy—it’s about being the one to design the overall strategy that AI helps execute.

If you’re going to survive in an AI-driven world, you need to learn design thinking—a methodology that solves problems by focusing on what humans actually need. It’s not about the most efficient or complex algorithm, but about creating products, services, and experiences that resonate with real people.

Take Airbnb as an example. Before they became a global phenomenon, the company was struggling. What turned things around? Not an algorithm, but a shift in focus to design thinking—an emphasis on understanding their users' emotional journeys and creating a seamless experience for both hosts and guests.

The founders, Brian Chesky and Joe Gebbia, realized that the reason they were struggling was not a lack of demand but trust issues and inconsistent user experiences.

-> They redesigned the host and guest profiles to feel more personal, fostering trust between strangers.

-> The review system was also revamped to emphasize transparency, encouraging both hosts and guests to leave honest feedback, which further enhanced trust.

AI can optimize booking systems, but only humans could have reimagined the concept of home-sharing from a user's perspective.

Research from Stanford University shows that design thinking isn’t just about aesthetics—it’s about solving human problems through empathy, creativity, and iteration. AI might help you streamline a design, but it can’t originate the creative spark needed to get there.

That’s your job.

creative strategy: be the visionary

The same goes for creative strategy. Sure, AI can optimize existing ideas, tweak them, and make incremental improvements. But those bold, audacious ideas that change the world? That’s still humanity’s forte. Think of it like this: AI is your trusty sous-chef, but you’re the one coming up with the recipe.

According to Albert Einstein (yes, I’m invoking Einstein here, even though he didn’t have AI in mind), “Imagination is more important than knowledge.” AI has the knowledge; you provide the imagination.

The World Economic Forum’s Future of Jobs Report echoes this sentiment, highlighting creativity as one of the top skills for the future workforce—something that AI simply cannot replicate. Machines can handle predictive models, but they can’t dream up the next big leap in innovation.

A study from the McKinsey Global Institute predicts that while AI will take over routine tasks, jobs requiring original thinking and strategy will remain solidly in human hands. So, instead of learning how to code another app, focus on crafting the strategy behind the app: Why should it exist? Who needs it? What gap in the market does it fill?

aesthetics: beauty in the eye of the beholder

Then there’s aesthetics. AI might know how to follow design principles, but it lacks a true understanding of taste and style.

Machines are experts in rules, but they don’t “get” beauty. Sure, they can mimic design trends, but creating those trends in the first place? That’s a uniquely human gift.

Even tech moguls like Steve Jobs understood the importance of aesthetics in design. His vision for Apple wasn’t just about functional technology; it was about creating products that were beautiful and intuitive. AI can help optimize color palettes and suggest layouts, but only you can make the leap to connect form with function in a way that resonates emotionally with people.

Researchers at MIT’s Media Lab have explored the intersection of AI and art, concluding that while AI can generate artistic works, it lacks a core aesthetic sensibility—that human touch that imbues art, design, and creative work with meaning. You’re the one who decides what’s beautiful, meaningful, and effective. Machines can’t teach you how to feel beauty, but they can follow your lead.

So, why bother learning to do it when AI is doing it better than you ever could? Focus instead on design thinking, creative strategy, and aesthetics—the areas where humans shine and machines falter. Be the visionary, not the executor. You’re not competing with AI to see who can write better code; you’re working with it to bring big-picture ideas to life. As long as you keep learning how to dream, innovate, and empathize, AI will remain a tool, not a replacement.

4. unlearn linear learning

Everything’s evolving faster than a toddler on a sugar high; today’s cutting-edge technology is tomorrow’s old news (remember when flip phones were the height of sophistication?).

Adaptability has never been more valuable.

If you’re reading this, you’ve probably never needed to hunt a mammoth, start a fire from scratch, or carve a hand axe. Those skills belong to a bygone era, buried by time.

Thanks to technological progress and societal shifts, we’ll never have to master them again. But in their place, we’re now expected to learn coding, writing, entrepreneurship—and a thousand other modern skills. The list keeps growing, and the pace of change is accelerating faster than ever. What we must learn evolves—and it’s evolving at breakneck speed.

So, toss aside that outdated notion of mastering a single skill or career path—what you really need to learn is how to learn.

Sure, AI evolves, but it does so through structured programming. It’s like that kid who can recite the periodic table but has no idea how to hold a conversation. Meanwhile, humans have the unique ability to continuously evolve in unpredictable and creative ways.

This is why it’s crucial to embrace a mindset of constant learning, unlearning, and relearning. The aim? To adapt to new environments faster than the systems around you can say “disruption.”

metacognition: how it saves you time and sanity

Metacognition is the fancy term for thinking about your own thinking. In practice, that means studying how you learn best so you can keep upgrading your skill set.

Research from psychologists like John Flavell emphasizes that metacognitive awareness enables you to strategize your learning processes effectively.

Metacognitive awareness is your brain’s way of saying, “Hey, this method isn’t working—maybe let’s try something else before you end up re-reading the same paragraph for the 18th time.” Researchers have found that learners with higher metacognitive skills—those who actively reflect on what they know and how they know it—perform better academically and tend to retain information longer.

Instead of spinning your wheels like a hamster on an existential treadmill, metacognitive strategies help you notice when something isn’t clicking. For example, you might realize that re-reading notes isn’t the most efficient way to study (hint: it’s not) and that quizzing yourself or teaching others works better.

Psychologists refer to this as regulation of cognition—the process of planning, monitoring, and evaluating your learning strategies. Research from Zimmerman (2002) shows that students who engage in these practices are not only more successful but also more motivated. Why? Because nothing kills motivation like feeling stuck in a loop of ineffective study methods. Metacognition helps you nip that in the bud.

growth mindset

Growth mindset has been talked about so much that it’s become a cliché, but it’s worth discussing regardless.

Psychologist Carol Dweck’s research distinguishes between two mindsets: fixed and growth.

A person with a fixed mindset believes abilities are set in stone—either you’re good at math or you’re not, full stop. On the other hand, someone with a growth mindset understands that even if you bomb that algebra test, it doesn’t mean you’re “bad at math” forever. It just means you haven’t nailed it yet. This seemingly small shift in thinking has a massive impact on how we approach challenges and respond to failure.

Studies show that people with a growth mindset are more likely to embrace obstacles, learn from criticism, and persist even when things get tough. In one of Dweck’s famous experiments, students who were taught to see failure as part of the learning process showed greater academic improvement than those who were not. It’s like turning every setback into a stepping stone. Or at least that’s the goal—realistically, some days setbacks just feel like faceplants.

Why does the growth mindset work so well? It comes down to how we interpret failure. People with a growth mindset view mistakes as valuable data points rather than personal shortcomings.

Neuroscience backs this up: a study by Moser et al. (2011) found that people with a growth mindset show a stronger brain response to their own mistakes, a sign that they pay closer attention to errors, and this extra attention was linked to better accuracy after an error.

This mindset also nurtures grit, which psychologist Angela Duckworth defines as the ability to persevere toward long-term goals despite difficulties.

Duckworth’s research suggests that grit, combined with a growth mindset, can predict success better than talent or IQ alone. So, if you’ve ever felt like an imposter for struggling while others seem naturally gifted, take heart: persistence can outweigh innate ability in the long run.

Cultivating resilience and the desire to keep improving are essential in an ever-changing landscape. So, the next time you find yourself failing spectacularly at something new (hello, sourdough bread-making), remind yourself that it’s not a disaster; it’s just another opportunity for growth. Plus, isn’t it comforting to know that failing is just part of the learning process? It’s like having a safety net, except instead of just catching you, it lets you bounce back up.

jack of all trades, master of a lot: cross-disciplinary skills

The world’s hyperconnected, and sticking to one field of expertise is like only using a hammer when you’ve got a whole toolbox available. Sure, you might become the world’s foremost expert on 13th-century Norwegian knitting techniques, but wouldn’t it be more useful to also know a bit about psychology, technology, or even why goldfish probably won’t learn to fetch?

Research published in the Journal of Educational Psychology shows that interdisciplinary education enhances both critical thinking and problem-solving abilities, giving you the mental flexibility to tackle complex issues from multiple perspectives. In short, being a jack-of-all-trades isn’t such a bad thing after all—it’s how the best innovations happen.

So, while your friend is busy diving deep into the latest niche topic (how to train a goldfish to play fetch, perhaps), you can be the one connecting the dots between psychology, technology, and whatever else sparks your interest. Think of it as creating your own unique cocktail of knowledge—one that’s both refreshing and slightly unpredictable.

At its core, interdisciplinary learning is about breaking down the silos between fields.

A 2017 study by Repko et al. found that combining insights from multiple disciplines fosters cognitive flexibility—the ability to switch between different ways of thinking based on the problem at hand. This is critical because many real-world challenges don’t come neatly packaged in one discipline.

Climate change? That’s a chemistry, economics, and sociology problem. Artificial intelligence ethics? You’ll need computer science, philosophy, and maybe a bit of law to make sense of that.

The magic happens in the overlap.

Research by Newell (2007) shows that when students engage in interdisciplinary learning, they become better at identifying patterns and drawing connections between seemingly unrelated ideas. It’s like constructing your own mental web—each strand is knowledge from a different field, and the more connections you weave, the stronger your understanding becomes.

Specialization has its place, but here’s the catch: industries and fields evolve quickly. What’s in demand today might be irrelevant tomorrow. A report by the World Economic Forum (WEF) predicts that future job markets will increasingly require a blend of skills—technical know-how plus creativity, emotional intelligence, and problem-solving abilities. Sticking too narrowly to one area can leave you vulnerable in a world that prizes adaptability.

Think of it like this: if your skillset is a one-trick pony, that pony better be very good at what it does. But if it can also do some juggling, maybe tap-dance a little and charm an audience?

Now that’s the kind of pony people want around. In fact, Nobel laureates often credit their most creative ideas to their hobbies and side interests. Richard Feynman, for instance, made significant contributions to quantum mechanics, quantum electrodynamics (QED), and particle physics, and his breakthroughs are sometimes linked to his playful pursuits, from doodling to playing the bongos.

After all, the future belongs to those who can pivot, adapt, and thrive in the face of change. And remember, while the machines may be programmed to evolve, you have the creative power to reinvent yourself time and time again—without needing a software update.

As we navigate the rapidly evolving landscape shaped by artificial intelligence, it’s crucial to reassess what skills and knowledge are truly worth our time and effort in this new era.

Consider this a rallying cry to pivot your learning journey: rather than investing your energy in areas that AI can easily automate, embrace change, challenge yourself to explore new realms of creativity, and cultivate the uniquely human qualities that will set you apart in a world increasingly dominated by technology.

By doing so, you will not only future-proof your career but also contribute to a vibrant, human-centered workforce that celebrates the essence of what it means to be human. In the age of AI, it is our creativity, emotional depth, and ability to connect with one another that will truly define our impact and legacy.

A few other things I didn't mention:

- Sports: Beyond being designed specifically to test human physiology, sports are popular because they are deeply tied to emotion, something machine performance cannot replicate.

- Comedy: AI is phenomenal at doing things that are expected. But comedy depends on shared experience, timing, and understanding subtle differences in meaning, which confuses AI.

Did I forget a skill?

This post is dedicated to the community of HCH.
